Carbon dioxide (CO2) flooding is a process whereby carbon dioxide is injected into an oil reservoir in order to increase output when extracting oil.

When a reservoir’s pressure is depleted through primary and secondary production, carbon dioxide flooding can be an ideal tertiary recovery method. It is particularly effective in reservoirs deeper than 2,000 ft, where the CO2 will be in a supercritical state, and where the API oil gravity is greater than 22–25° and the remaining oil saturation is greater than 20%. Carbon dioxide flooding is not affected by the lithology of the reservoir area, only by the reservoir characteristics. It works on the premise that injecting CO2 into the reservoir reduces the viscosity of the hydrocarbons, making them easier to sweep to the production well.
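The screening thresholds above can be written as a simple check. The following is a minimal sketch, not an engineering tool: the `Reservoir` class, its field names, and the function `screen_for_co2_flooding` are hypothetical, and the API gravity cutoff uses the lower end (22°) of the 22–25° range quoted in the text as an assumption.

```python
from dataclasses import dataclass

# Thresholds taken from the text; the API cutoff assumes the lower
# end of the quoted 22-25 degree range.
MIN_DEPTH_FT = 2000.0
MIN_API_GRAVITY = 22.0
MIN_OIL_SATURATION = 0.20  # fraction of pore volume


@dataclass
class Reservoir:
    """Hypothetical container for the three screening inputs."""
    depth_ft: float
    api_gravity: float
    remaining_oil_saturation: float  # fraction between 0 and 1


def screen_for_co2_flooding(reservoir: Reservoir) -> list[str]:
    """Return the list of failed criteria; an empty list means the
    reservoir passes this rough screen."""
    failures = []
    if reservoir.depth_ft <= MIN_DEPTH_FT:
        failures.append("depth is not greater than 2,000 ft")
    if reservoir.api_gravity <= MIN_API_GRAVITY:
        failures.append("API gravity is not greater than 22 degrees")
    if reservoir.remaining_oil_saturation <= MIN_OIL_SATURATION:
        failures.append("remaining oil saturation is not greater than 20%")
    return failures


candidate = Reservoir(depth_ft=4800.0, api_gravity=31.0,
                      remaining_oil_saturation=0.35)
print(screen_for_co2_flooding(candidate) or "passes the rough screen")
```

Returning the failed criteria rather than a single boolean makes it clear which of the three conditions rules a reservoir out.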

If a well has been produced before and has been designated suitable for CO2 flooding, the first step is to restore the reservoir pressure to a level suitable for CO2 flooding. This is done by injecting water with the production well shut off. Once the reservoir reaches this pressure, CO2 is injected through the same injection wells used to restore pressure. The CO2 is forced into the reservoir, where it must come into contact with the oil. This creates a miscible zone that can be moved more easily to the production well. Normally the CO2 injection is alternated with further water injection, and the water acts to sweep the oil towards the production zone. The Weyburn oil field is a well-known example where this method is applied under financially attractive conditions.
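The injection sequence described above (water to restore pressure, then CO2 alternated with water) can be laid out as an ordered list of steps. This is only an illustrative sketch: the function name `injection_schedule` and the number of cycles are assumptions, and the text gives no slug sizes or durations, so none are modeled.

```python
def injection_schedule(n_cycles: int) -> list[tuple[str, str]]:
    """Build an ordered list of (injected fluid, purpose) steps.

    n_cycles is a hypothetical parameter: the number of CO2/water
    alternations after the initial repressurization.
    """
    if n_cycles < 1:
        raise ValueError("n_cycles must be at least 1")

    # Step 1: water injection with the production well shut off.
    steps = [("water", "restore reservoir pressure, production well shut off")]

    # Then alternate CO2 and water through the same injection wells.
    for _ in range(n_cycles):
        steps.append(("CO2", "contact the oil and form a miscible zone"))
        steps.append(("water", "sweep the oil towards the production zone"))
    return steps


for fluid, purpose in injection_schedule(2):
    print(f"{fluid}: {purpose}")
```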

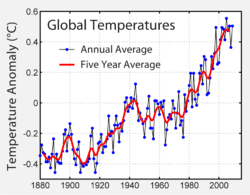

CO2 flooding is the second most common tertiary recovery technique and is used in facilities around the world. In the context of global warming, it is also an available method for curbing CO2 emissions.

From http://en.wikipedia.org/
